Site audits are an essential part of SEO. Regularly crawling and checking your site to ensure that it is accessible, indexable and has all SEO elements implemented correctly can go a long way in improving the user experience and rankings.

However, crawling enterprise sites effectively and efficiently is always a challenge. The aim is to run crawls that finish within a reasonable amount of time without negatively impacting the performance of the site.

Recommended Reading: The Best SEO Audit Checklist Template to Boost Search Visibility and Rankings

The Challenges of Crawling Enterprise Sites

Enterprise sites are constantly being updated. Keeping up with these site changes requires that you audit the site regularly. Site audits help identify issues with the site, and running them regularly gives you snapshots of the site that you can trend over time.

But it can be difficult to run crawls on enterprise sites. There are inherent difficulties: sites can have many millions of URLs, time and resources can be limited, and crawl results can go stale (i.e. if a crawl takes a long time to run, things could have changed by the time it’s finished). However, these challenges should not deter you from crawling your site regularly. They are simply obstacles that you can learn about and work around.

In fact, there are multiple reasons why you need to crawl an enterprise site:

- Ensure that all technical SEO elements are implemented correctly. A crawler that not only crawls your site but also provides a list of both on- and off-page issues is critical.

- Crawl the website just as Googlebot would. This gives you an early idea of how your site appears to the search engine.

- Run crawls pre- and post-migration to ensure that website changes do not affect search engine visibility.

- Audit particular elements of your site, such as images, links, and video, to ensure the information on the site is relevant.

- Audit hreflang if your site is global, to ensure that the correct language and regional version of your site is displayed based on where the user is accessing it from.

- Run canonical audits to ensure that the preferred pages on your site are indexable and accessible to the search engine and users.

Recommended Reading: SEO Crawlability Issues and How to Find Them

What to Keep in Mind When Crawling Enterprise Sites

There are certain questions you need to ask yourself before you run your crawl so you can overcome the challenges listed above. The goal is a crawl that doesn’t interfere with your site’s operations and that pulls all the information relevant to you.

#1. Should I run a JavaScript crawl or a standard crawl?

When setting up a crawl, you have two options in determining the type of crawl: standard or JavaScript.

Standard crawls only crawl the source of the page, meaning that only the HTML on the page is crawled. This type of crawl is quick and is the recommended method, especially if the links on the page are not dynamically generated.

JavaScript crawls, on the other hand, wait for the page to render as it would in a browser. They are much slower than standard crawls, so they should be used selectively. But over the years, more and more sites have started to use JavaScript, so JavaScript crawling may be necessary.

Google began to crawl JavaScript in 2008, so the fact that Google can crawl these pages is nothing new. The problem was that Google could not gather much information from JavaScript pages, which made them harder to render and find than plain HTML pages. That has changed: sites that use JavaScript have begun to see more pages crawled and indexed over the past year, which suggests that Google is improving its support for JavaScript.

Determining the type of crawl is one of the most important things to consider.

If you are not sure which type of crawl to run on your site, you can disable JavaScript and try to navigate the site and its links. Do note: sometimes JavaScript is confined to content and not links, so in that case it may be okay to set up a regular crawl.

You can also check the source code and compare it to links on the rendered page, inspect the site in Chrome, or run test crawls to determine which type of crawl is best suited for your site.
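The source-versus-rendered comparison described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular crawler does it: the function names and the two HTML snippets are hypothetical, and it assumes you have already saved the raw page source and the rendered DOM (for example, copied from Chrome) as strings.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag it sees."""

    def __init__(self):
        super().__init__()
        self.links = set()

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.add(value)


def extract_links(html):
    """Return the set of link targets found in an HTML string."""
    collector = LinkCollector()
    collector.feed(html)
    return collector.links


def links_only_in_rendered(source_html, rendered_html):
    """Links that exist after rendering but are missing from the raw source."""
    return extract_links(rendered_html) - extract_links(source_html)


# Hypothetical page: the raw source has one link, the rendered DOM has two.
source_html = '<a href="/shoes">Shoes</a><div id="nav"></div>'
rendered_html = (
    '<a href="/shoes">Shoes</a>'
    '<div id="nav"><a href="/boots">Boots</a></div>'
)

js_only = links_only_in_rendered(source_html, rendered_html)
print(sorted(js_only))
```

If the result is empty across a handful of representative pages, a standard crawl is likely to find the same links as a JavaScript crawl. If it is not, the links listed are the ones a standard crawl would miss.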

#2. How fast should I crawl?

Typically, crawl speed is measured in pages crawled per second, and it is driven by the number of simultaneous requests made to your site.

We recommend fast crawls: the longer a crawl runs, the older the results are by the time it finishes. (Remember, one of the challenges of crawling enterprise sites is staleness of the crawl.) You should crawl as fast as your site allows. You could also use a distributed crawl (i.e. a crawl that uses multiple nodes with separate IPs to run multiple parallel requests against your site).

However, it is important to know the capabilities of your site infrastructure.

A crawl that runs too fast can have a negative impact on the performance of your site. But also keep in mind that it is not necessary to crawl everything on your site – more on that below.
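To get a feel for the trade-off between speed and staleness, it can help to estimate how long a crawl would take at a given rate. The sketch below is simple arithmetic with illustrative numbers. The function name is hypothetical, and it assumes a constant crawl rate, which real crawls rarely sustain.

```python
def estimated_crawl_hours(url_count, pages_per_second):
    """Rough crawl duration in hours, assuming a constant crawl rate."""
    if pages_per_second <= 0:
        raise ValueError("pages_per_second must be positive")
    return url_count / pages_per_second / 3600


# Illustrative numbers only: a 2-million-URL site at three crawl speeds.
for rate in (5, 20, 50):
    hours = estimated_crawl_hours(2_000_000, rate)
    print(f"{rate} pages/sec: about {hours:.1f} hours")
```

Comparing a few rates this way makes it easier to agree with your infrastructure team on a speed that finishes in a reasonable window without overloading the site.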

#3. When should I crawl?

Although you can run a crawl at any time, it’s best to do it at off-peak hours or days. Scheduling your crawls is especially useful because you can set the crawl to automatically run at these times. Crawling the site when there is lower traffic to the site means there is less of a chance of the crawl slowing down the site’s infrastructure. If there is a crawl occurring at a high traffic time, the network team may rate limit the crawler if the site is being negatively impacted.
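If you script your own scheduling, the off-peak check can be as simple as the sketch below. The 1:00 to 5:00 window is an assumed default for illustration. Your real off-peak hours should come from your own traffic data, and the function name is hypothetical.

```python
from datetime import datetime, time


def is_off_peak(moment, window_start=time(1, 0), window_end=time(5, 0)):
    """Return True if `moment` falls inside the off-peak window.

    The window is start-inclusive and end-exclusive, and may wrap past
    midnight (for example 22:00 to 04:00).
    """
    current = moment.time()
    if window_start <= window_end:
        return window_start <= current < window_end
    # Window wraps past midnight.
    return current >= window_start or current < window_end


# Only start the crawl if the current local time is inside the window.
if is_off_peak(datetime.now()):
    print("Inside the off-peak window: OK to start the crawl.")
else:
    print("Peak hours: hold the crawl until the off-peak window.")
```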

You’re also able to identify issues and report on long-term success with regular recurring crawls.

Recommended Reading: What Are the Best Site Audit and Crawler Tools?

#4. Does my website block or restrict external crawlers?

Many enterprise sites block all external crawlers, so you’ll have to remove any potential restrictions before the crawl. You need to ensure that your crawler has access to your site.

At seoClarity, we run a fully managed crawl – there are no limits on how fast or how deep we can crawl, so we recommend whitelisting our crawler. The number one reason we’ve seen crawls fail is that the crawler is not whitelisted.
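One restriction you can check yourself before a crawl is robots.txt. The sketch below uses Python's standard `urllib.robotparser` to test whether a given user agent may fetch a URL. The robots.txt content, the `ExampleAuditBot` user agent, and the URL are all made up for illustration. Note that this only covers robots.txt rules: blocks at the firewall, CDN, or bot-management layer will not show up here and need to be confirmed with your network team.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt, user_agent, url):
    """Return True if robots.txt permits `user_agent` to fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical robots.txt that allows Googlebot and blocks everyone else.
robots_txt = """\
User-agent: Googlebot
Allow: /

User-agent: *
Disallow: /
"""

url = "https://www.example.com/products/"
for agent in ("Googlebot", "ExampleAuditBot"):
    verdict = "allowed" if is_allowed(robots_txt, agent, url) else "blocked"
    print(f"{agent}: {verdict}")
```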

#5. What should I crawl?

It’s important to know that it is not necessary to crawl every page.

Dynamically generated pages change so often that the findings could be obsolete by the time the crawl finishes.

We recommend running sample crawls. A sample crawl across different types of pages is generally enough to identify patterns and issues on the site. You can limit it by sub-folders, sub-domains, URL parameters, URL patterns, etc. You can also customize the depth and count of pages crawled. At seoClarity, we set 4 levels as our default depth.
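To make the idea of limits concrete, here is a minimal sketch of a sample crawl that applies a depth limit, a page-count limit, and a sub-folder filter. It walks a small in-memory link graph rather than fetching real pages, so the graph, the function name, and the parameter names are all hypothetical. It also assumes the start page counts as depth 0, which may differ from how a given crawler counts levels.

```python
from collections import deque


def sample_crawl(link_graph, start_url, max_depth=4, max_pages=1000,
                 path_prefix="/"):
    """Breadth-first crawl limited by depth, page count, and sub-folder.

    `link_graph` maps each URL path to the list of paths it links to.
    """
    seen = {start_url}
    queue = deque([(start_url, 0)])
    crawled = []
    while queue and len(crawled) < max_pages:
        url, depth = queue.popleft()
        crawled.append(url)
        if depth == max_depth:
            continue  # Record the page, but do not follow its links.
        for link in link_graph.get(url, []):
            if link not in seen and link.startswith(path_prefix):
                seen.add(link)
                queue.append((link, depth + 1))
    return crawled


# Hypothetical site structure.
link_graph = {
    "/": ["/shop/", "/blog/"],
    "/shop/": ["/shop/shoes/", "/blog/post-1"],
    "/shop/shoes/": ["/shop/shoes/red"],
    "/shop/shoes/red": [],
}

# Sample only the /shop/ sub-folder, one level below the start page.
print(sample_crawl(link_graph, "/shop/", max_depth=1, path_prefix="/shop/"))
```

Breadth-first order is a deliberate choice for sampling: it reaches the pages closest to the start URL first, so a page-count limit cuts off the deepest pages rather than an arbitrary branch.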

You can also implement segmented crawling. This involves breaking down the site into small sections that represent the entire site. This offers a trade-off between completeness and the timeliness of the data.

There is typically no need to crawl every page of the site every time you run a crawl. Full site crawls take a long time to finish, and may not help with what you’re trying to achieve. There will, of course, be some cases where you do want to crawl the entire site, but this depends on your specific use cases.

#6. What about URL parameters?

This question comes up often, especially if your site has faceted navigation (i.e. filtering and sorting results based on product attributes). We recommend removing parameters that lead to duplicate crawling.

A URL parameter is a key-value pair appended to the URL, often as the result of a click, that tells the page how to behave. Parameters are typically used to filter, organize, track, and present content, but not all of them are useful in a crawl. Because every combination of parameter values can produce a separate URL, they can multiply the size of a crawl, so they should be optimized when setting up a crawl. And while you can remove all URL parameters when you crawl, this is not recommended because there are some parameters you may care about.
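As a rough illustration of selective parameter removal, the sketch below strips a configurable set of parameters from a URL and sorts the rest, so that URLs differing only in ignored parameters or in parameter order collapse to a single crawl target. The parameter names (`sort`, `sessionid`, `color`) and the function name are hypothetical. Which parameters to ignore depends entirely on your site.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def normalize_url(url, ignored_params):
    """Drop ignored query parameters and sort the remaining ones."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in ignored_params
    ]
    kept.sort()
    return urlunsplit(
        (parts.scheme, parts.netloc, parts.path, urlencode(kept), parts.fragment)
    )


# Hypothetical choice: sorting and session parameters create duplicates,
# while the color facet is a parameter we do care about.
ignored = {"sort", "sessionid"}
urls = [
    "https://www.example.com/shoes?sort=price&color=red&sessionid=abc",
    "https://www.example.com/shoes?color=red&sort=name",
    "https://www.example.com/shoes?color=blue",
]

unique_targets = sorted({normalize_url(u, ignored) for u in urls})
for target in unique_targets:
    print(target)
```

Here three discovered URLs reduce to two crawl targets, because the first two differ only in parameters that were marked as ignorable.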

You may have the parameters you want to crawl or ignore loaded into your Search Console. If this information is set up, you can send it to us and we can set it up for our crawl too.

How Does seoClarity Solve This Problem?

As you’ve seen, a lot can go into setting up a crawl. You want to tailor it so that you’re getting the information that you need — information that will be impactful for your SEO efforts.

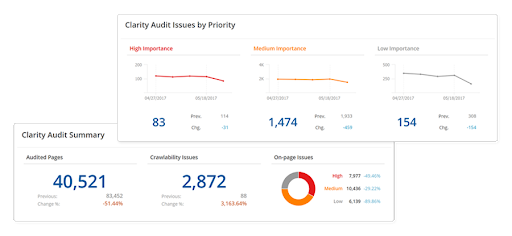

Fortunately at seoClarity, we offer our site audit tool, Clarity Audits, which is a fully managed crawl.

We work with clients and support them in setting up the crawl based on their use case to get the end results they want.

Clarity Audits runs each crawled page through more than 100 technical health checks, and better yet, there are no artificial limits placed on the crawl. You have full control over every aspect of the crawl settings, including what you’re crawling, the type of crawl (standard or JavaScript), the depth of the crawl, and the crawl speed. We help you optimize and audit your site, which in turn improves its overall usability.

Our Client Success Managers also ensure you have all of your SEO needs accounted for, so when you set up a crawl, any potential issues or roadblocks are identified and handled. Think of your Client Success Manager as your main point of contact at seoClarity.

Want to perform a full technical site audit but unsure where to begin? Use this free site audit checklist to guide you through each step of the process, including the information covered in this post.

Conclusion

It’s important to tailor a crawl to your specific situation so that you get the data that you’re looking for, from the pages that you care about. Most enterprise sites are, after all, so large that it only makes sense to crawl what is relevant to you. Staying proactive with your crawl setup allows you to gain important insights while ensuring you save time and resources along the way. seoClarity makes it easy to change crawl settings to align the crawl with your unique use case.
